Google announced two artificial intelligence models to help control robots and have them perform specific tasks like categorizing and organizing.

Gemini Robotics was described by Google as an advanced vision-language-action model built on Gemini 2.0, the company's AI language model. The company touted physical actions as a new output modality for controlling robots.

Gemini Robotics-ER, with "ER" meaning embodied reasoning, as Google explained in a press release, was developed for advanced spatial understanding and to enable roboticists to run their own programs.

The announcement touted the robots as being able to perform a "wider range of real-world tasks" with both clamp-like robot arms and humanoid-type arms.

"To be useful and helpful to people, AI models for robotics need three principal qualities: they have to be general, meaning they’re able to adapt to different situations; they have to be interactive, meaning they can understand and respond quickly to instructions or changes in their environment," Google wrote.

The company added, "[Robots] have to be dexterous, meaning they can do the kinds of things people generally can do with their hands and fingers, like carefully manipulate objects."

Attached videos showed robots responding to verbal commands to organize fruit, pens, and other household items into different sections or bins. One robot was able to adapt to its environment even when the bins were moved.

Other short clips in the press release showcased the robot(s) playing cards or tic-tac-toe and packing food into a lunch bag.

The company went on, "Gemini Robotics leverages Gemini's world understanding to generalize to novel situations and solve a wide variety of tasks out of the box, including tasks it has never seen before in training."

"Gemini Robotics is also adept at dealing with new objects, diverse instructions, and new environments," Google added.

What they're not saying

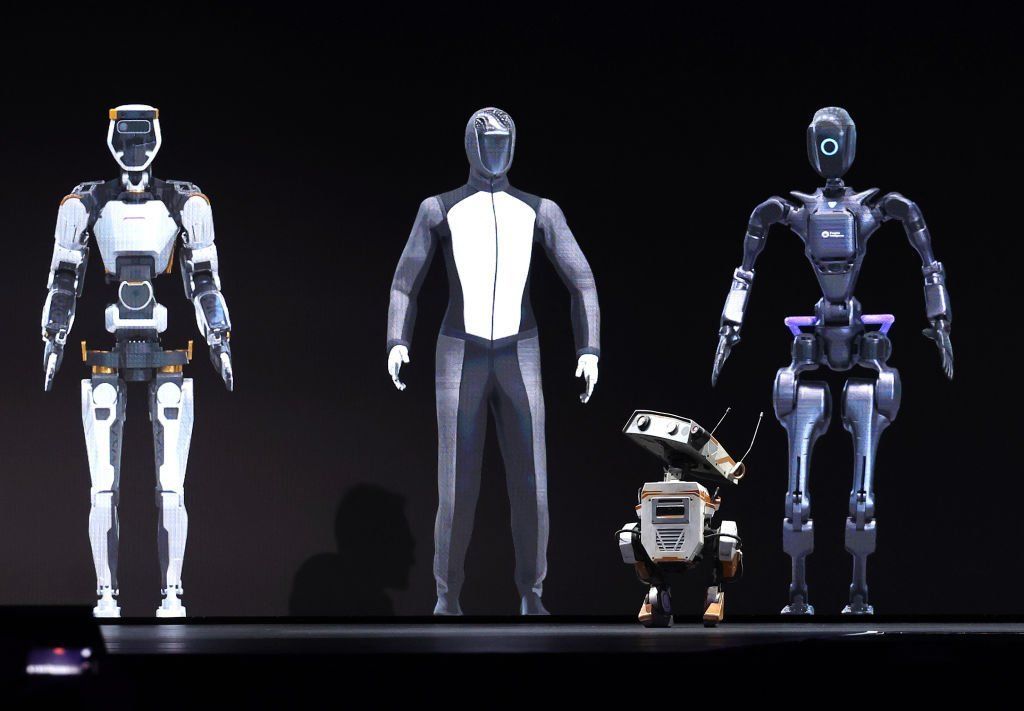

Tesla robots displayed similar capabilities near the start of 2024. Photo by John Ricky/Anadolu via Getty Images

Google did not explain to the reader that this is not new technology, nor are the innovations particularly impressive given what is known about advanced robotics already.

In fact, it was mid-2023 when a group of scientists and robotics engineers at Princeton University showcased a robot that could learn an individual's cleaning habits and techniques to properly organize a home.

The bot could also throw out garbage, if necessary.

The "Tidybot" had users input text describing sample preferences to instruct the robot on where to place items, such as "yellow shirts go in the drawer, dark purple shirts go in the closet." The robot used language models to summarize these preferences and supplemented its database with images found online, comparing them with objects in the room to identify exactly what it was looking for.

The bot was able to fold laundry, put garbage in a bin, and organize clothes into different drawers.

About six or seven months later, Tesla revealed similar technology when it showed its robot, "Tesla Optimus," removing a T-shirt from a laundry basket before gently folding it on a table.

Essentially, Google appears to have connected its language model to existing technology so that a robot can take spoken commands through speech-to-text, rather than commands entered solely as text.

